Originally, we set up this site to focus on woodcrafting and painting, but as our interests grew, we have increasingly used Raspberry Pis to add motion and life to our work. This post will get you started using Raspberry Pis in your creations.

Please note, this material is provided for informational purposes only and is not a guide on how to create the designs. Please take a look at our disclaimer.

Why Raspberry Pi?

- Powerful computing platform with easy-to-use languages.

- Low energy consumption; runs quietly and stays cool.

- Rich online support and user base.

- Has 26 pins built in, enabling rapid integration with Internet of Things (IoT) technology.

Peripherals

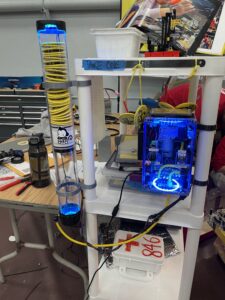

Today, most people develop on a laptop or tablet, but Raspberry Pis require old-fashioned peripherals: power cables, screen, keyboard and mouse. You need to set up a physical development environment and make sure you have all the necessary peripherals. Newer Raspberry Pi models use a Micro HDMI port, so you will need a converter. We do a lot of coding on the couch, so we built a makeshift laptop, as seen below.

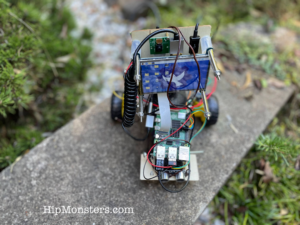

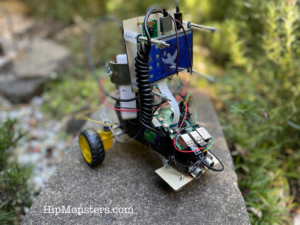

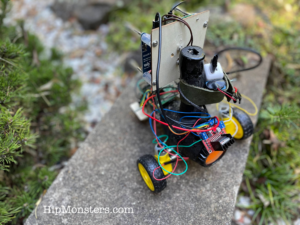

A side view of our Raspberry Pi laptop.

A front view of our laptop.

A mouse can take some getting used to, so we recommend a wireless keyboard (seen above) with a built-in trackpad. One plus is that the keyboard and trackpad together use only one USB port.

The Hard Drive

A Raspberry Pi’s OS is stored on a Micro SD card. To start, we recommend getting two cards with at least 64 GB each. If you work with images or sound, the card fills up fast. You will also need at least two card readers: one USB-A reader for the Raspberry Pi, for when you transfer code, and one for your other machine, to build the OS image.

Building the OS Image

You can buy Micro SD cards with the OS preinstalled. If you do not have a laptop or desktop, that is your only real option. You can also build your own OS image using the tool provided by Raspberry Pi. You can download it here: raspberrypi.com/software.

We recommend modifying the advanced settings to pre-configure your login and Wi-Fi password.

Booting the Device

Make sure to use the appropriate power supply as specified by Raspberry Pi. Depending on the version, booting can take a while. Once it has finished booting, you should see a screen that looks like most standard desktop environments.

Raspberry Pi OS is an ARM version of Linux. If you have used Linux, most of the standard tools will be available. If you have only used Windows or OSX, the environment should still seem very familiar, since all desktop environments follow the same basic principles. If you have never used a desktop environment, this is a great place to start!

Configuring Your Environment

The keyboard defaults to the UK layout. If you are not in the UK, many of the keys will not work as expected. In Preferences, open Mouse and Keyboard Settings, then click the Keyboard Layout button at the bottom. In the combo box, choose the appropriate country.

We also recommend using a smaller image, or no image at all, for the background to use less memory.

Developing Your Next Big Thing!

We started out using Scratch as a development tool. If that works for you and makes sense, keep using it! Here is a link on how to install it on a Raspberry Pi.

We have now migrated to using Python and C++. To write code, we use the Geany Programmer’s Editor. It lacks some features of Visual Studio Code (which we develop on in Windows and OSX) but has a light footprint.

Typically, we write code for a Raspberry Pi on both a MacBook and the Raspberry Pi itself. We find the MacBook is a similar enough environment that we do not need to change our code much. If you look at our code on GitHub, you will see we often have different logic based on which environment the code is run on. Note: some packages, such as interfaces to sensors, only work on a Raspberry Pi. In these sections of the code, we have non-functioning stubs if the platform is OSX.
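To make the stub idea concrete, here is a minimal sketch in Python. It is not taken from our GitHub code: the class and function names, the pin numbers, and the choice of a distance sensor are all assumptions made for illustration. The only real library call is `DistanceSensor` from `gpiozero`, which is imported lazily so the file still loads on a MacBook where that package is not installed.

```python
import platform


def running_on_raspberry_pi() -> bool:
    """Best-effort guess: Linux on an ARM CPU is treated as a Raspberry Pi."""
    machine = platform.machine().lower()
    return platform.system() == "Linux" and machine.startswith(("arm", "aarch64"))


class StubDistanceSensor:
    """Non-functioning stand-in used when no sensor hardware is attached."""

    def read_cm(self) -> float:
        return 0.0  # fixed placeholder, not a real measurement


class PiDistanceSensor:
    """Thin wrapper around gpiozero's DistanceSensor (pin numbers are hypothetical)."""

    def __init__(self, echo_pin: int = 24, trigger_pin: int = 23):
        # Imported here so that non-Pi machines never need gpiozero installed.
        from gpiozero import DistanceSensor

        self._sensor = DistanceSensor(echo=echo_pin, trigger=trigger_pin)

    def read_cm(self) -> float:
        return self._sensor.distance * 100  # gpiozero reports metres


def make_distance_sensor(on_pi=None):
    """Return the real sensor on a Pi and the stub everywhere else."""
    if on_pi is None:
        on_pi = running_on_raspberry_pi()
    return PiDistanceSensor() if on_pi else StubDistanceSensor()
```

The rest of the program calls `make_distance_sensor().read_cm()` and never needs to know which platform it is on. Passing `on_pi=False` forces the stub, which is handy for testing on the Pi itself without wiring anything up.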

We transfer code using the SD reader. Both OSX and Linux auto-detect SD cards when attached, but with Linux it can take a bit, so be patient. Also, Linux sometimes cannot write to a large SD card, so try a smaller one first.
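If you would rather script the transfer than drag folders around, the following is a rough sketch using only the Python standard library. The function names are our own inventions for this example, and the mount location is an assumption: cards typically show up under `/Volumes` on OSX and under `/media` on Linux, but check where yours appears.

```python
import shutil
import time
from pathlib import Path


def wait_for_card(card_mount: Path, timeout_s: float = 60.0, poll_s: float = 1.0) -> bool:
    """Poll until the card's mount folder appears, or give up after timeout_s."""
    deadline = time.monotonic() + timeout_s
    while True:
        if card_mount.is_dir():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)  # Linux can take a while to detect the card


def copy_project(project_dir: Path, card_mount: Path) -> Path:
    """Copy project_dir onto the mounted card and return the new location."""
    if not card_mount.is_dir():
        raise FileNotFoundError(f"SD card not mounted at {card_mount}")
    destination = card_mount / project_dir.name
    # dirs_exist_ok=True (Python 3.8+) lets a repeat transfer overwrite the old copy.
    shutil.copytree(project_dir, destination, dirs_exist_ok=True)
    return destination
```

A typical call would be `wait_for_card(card)` followed by `copy_project(Path("my_project"), card)`, where `card` is the `Path` of wherever your system mounts the SD card.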

Our next post will dive deeper into the basics of programming Python on a Raspberry Pi. For now, if you have never used Linux or a desktop environment, we recommend just browsing the Web using Chromium (the open-source base of Chrome) to familiarize yourself.

Happy Creating!